Introduction

In computer science, reducing memory consumption and computation time is often critical, and sparse matrix data structures are one of the standard tools for doing so. By storing only the non-zero entries of matrices in which most values are zero, they save memory and avoid wasted arithmetic. This article explains what a sparse matrix is, where sparse matrices appear, what benefits they bring, and how they are commonly implemented.

What Is a Sparse Matrix in Data Structures?

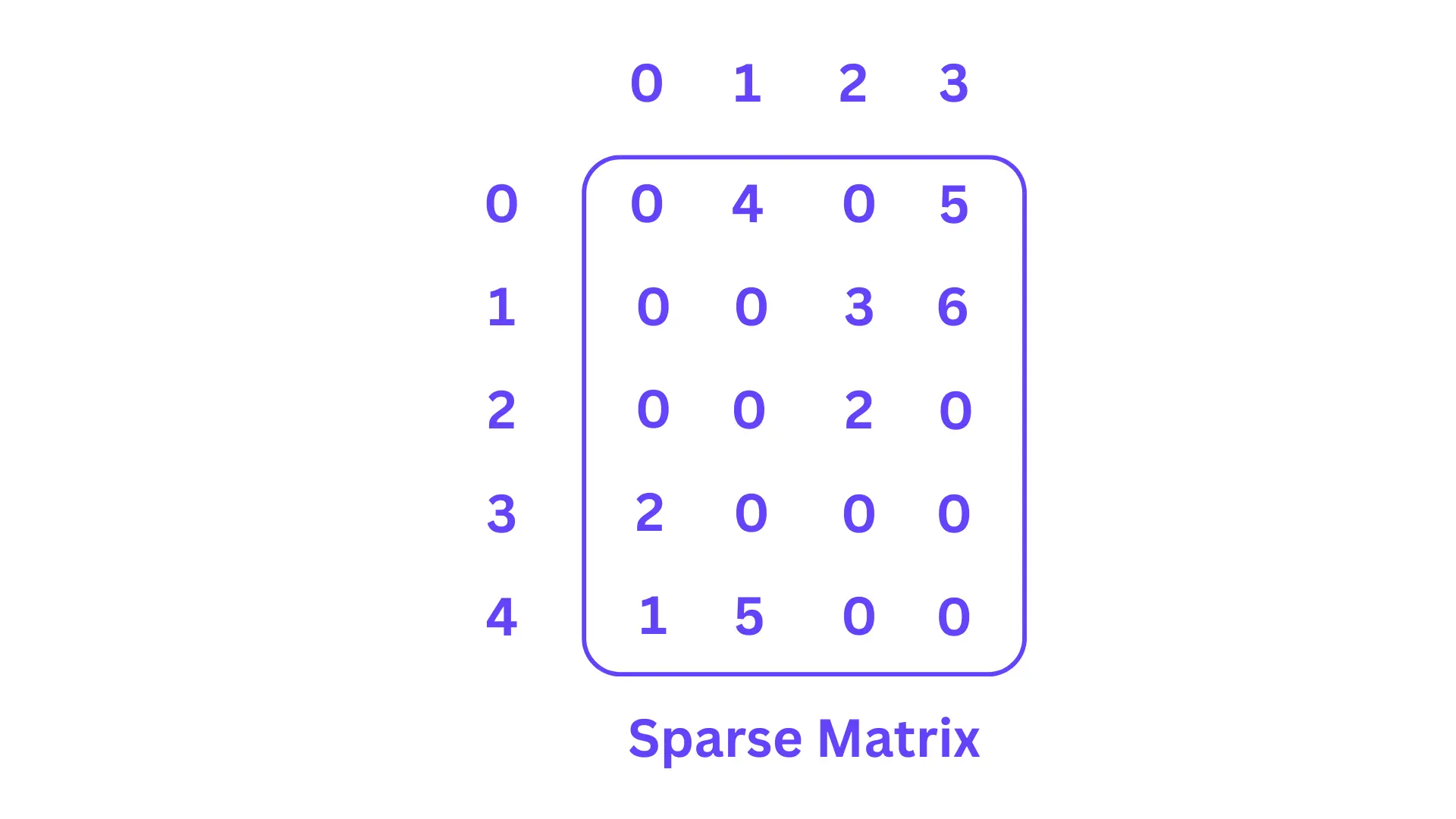

A sparse matrix is a matrix in which most of the elements are zero. Such matrices arise frequently in scientific simulations, image processing, and network analysis. Storing them in a conventional two-dimensional array wastes memory on the zeros and wastes computation time on operations involving them. Sparse matrix data structures avoid this by storing only the non-zero values together with their row and column indices.
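
As a minimal illustration, the sketch below stores a small matrix as a list of (row, column, value) triplets, a layout usually called the coordinate (COO) format. The helpers `to_coo` and `from_coo` are hypothetical names chosen for this example, not part of any library.

```python
# Hypothetical helpers illustrating coordinate-list (COO) storage:
# keep only (row, col, value) triplets for non-zero entries, plus the shape.

def to_coo(dense):
    """Return (shape, triplets) for the non-zero entries of a dense matrix."""
    rows = len(dense)
    cols = len(dense[0]) if rows else 0
    triplets = [
        (i, j, value)
        for i, row in enumerate(dense)
        for j, value in enumerate(row)
        if value != 0
    ]
    return (rows, cols), triplets


def from_coo(shape, triplets):
    """Rebuild the dense matrix; every position not listed is zero."""
    rows, cols = shape
    dense = [[0] * cols for _ in range(rows)]
    for i, j, value in triplets:
        dense[i][j] = value
    return dense


matrix = [
    [0, 0, 3, 0],
    [0, 0, 0, 0],
    [5, 0, 0, 7],
]
shape, triplets = to_coo(matrix)
print(shape, triplets)  # (3, 4) [(0, 2, 3), (2, 0, 5), (2, 3, 7)]
assert from_coo(shape, triplets) == matrix  # the round trip loses nothing
```

Only 3 of the 12 entries are stored; the shape is kept so the zeros can be reconstructed.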

Advantages of Sparse Matrix Data Structures

Sparse matrix data structures offer several advantages over dense (two-dimensional array) storage:

- Reduced Memory Footprint: A dense n × m array allocates memory for all n·m elements, most of which are zero in a sparse matrix. Sparse formats store only the non-zero values and their indices, so memory grows with the number of non-zeros rather than with the full size of the matrix.

- Faster Computations: Dense algorithms perform many multiplications and additions involving zeros. Sparse algorithms skip zero entries, so the cost of an operation such as matrix-vector multiplication is proportional to the number of non-zeros instead of n·m.

- Enhanced Performance: In workloads dominated by matrix operations, such as scientific simulations or machine learning on high-dimensional data, these memory and computation savings can make otherwise impractical problem sizes feasible.

- Economical Storage: Storing and transmitting sparse matrices become more economical due to reduced data size. This is particularly advantageous in applications dealing with large datasets or network communications.
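
To make the memory savings concrete, the sketch below estimates storage for a dense array and for the Compressed Sparse Row (CSR) format discussed under implementation. It assumes 8-byte floating-point values and 4-byte integer indices; real libraries may use other sizes, and `dense_bytes` and `csr_bytes` are hypothetical helpers.

```python
# Rough storage estimate, dense vs. CSR.
# Assumed sizes: 8-byte floating-point values, 4-byte integer indices.
VALUE_BYTES = 8
INDEX_BYTES = 4


def dense_bytes(n_rows, n_cols):
    # A dense layout stores every element, zero or not.
    return n_rows * n_cols * VALUE_BYTES


def csr_bytes(n_rows, nnz):
    # CSR stores nnz values, nnz column indices, and n_rows + 1 row pointers.
    return nnz * VALUE_BYTES + nnz * INDEX_BYTES + (n_rows + 1) * INDEX_BYTES


n, nnz = 10_000, 50_000  # a 10,000 x 10,000 matrix with 5 non-zeros per row
print(dense_bytes(n, n))  # 800000000 (about 800 MB)
print(csr_bytes(n, nnz))  # 640004 (about 0.64 MB)
```

Under these assumptions the CSR estimate is roughly 1,250 times smaller than the dense one.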

Applications of Sparse Matrix Data Structures

Sparse matrix data structures are used in many fields:

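- Image Processing: Natural images are usually dense, but many intermediate results, such as the binary edge maps produced by edge detection, are mostly zero. Linear image operations such as filtering can also be written as very large matrices with only a few non-zeros per row, and sparse storage keeps these manageable in both memory and processing time.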

- Network Analysis: The adjacency matrix of a large network is usually sparse because each node connects to only a small fraction of the others. Sparse storage makes it practical to analyze relationships between nodes in such graphs.

- Scientific Simulations: Numerical simulations involving partial differential equations, for example finite element analysis, often produce sparse matrices. Sparse matrix data structures make these computations faster and allow larger models to fit in memory.

- Natural Language Processing: In tasks like text analysis and sentiment classification, the term-document matrix is typically sparse because each document uses only a small fraction of the vocabulary, so sparse storage makes processing large text collections efficient.
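
As a small illustration of the natural language processing case, the sketch below builds a term-document count matrix in a dictionary-of-keys layout, where only (document, term) pairs with a non-zero count are stored. The corpus is a made-up toy example, and the variable names are chosen for illustration only.

```python
from collections import Counter

# Toy corpus (made up for illustration); each document becomes one row.
documents = ["the cat sat", "the dog sat", "a cat ran"]

# Give each distinct term a column index, sorted so the mapping is reproducible.
terms = sorted({term for doc in documents for term in doc.split()})
vocabulary = {term: j for j, term in enumerate(terms)}

# Dictionary-of-keys storage: only (row, column) pairs with a non-zero count are kept.
term_doc = {}
for i, doc in enumerate(documents):
    for term, count in Counter(doc.split()).items():
        term_doc[(i, vocabulary[term])] = count

total_cells = len(documents) * len(vocabulary)
print(len(term_doc), "of", total_cells, "cells stored")  # 9 of 18 cells stored
```

In this toy corpus half the cells are filled; with a realistic vocabulary, each document touches only a tiny fraction of the columns, and the savings grow accordingly.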

Implementing Sparse Matrix Data Structures

Implementing and using sparse matrices involves several key steps:

- Choosing the Right Representation: Common representations include the Compressed Sparse Row (CSR) and Compressed Sparse Column (CSC) formats. CSR stores the non-zero values, their column indices, and an array of row pointers marking where each row starts; CSC stores the non-zero values, their row indices, and an array of column pointers marking where each column starts.

- Construction: The non-zero elements are identified, and their values, together with their row and column indices, are stored in the chosen representation. In practice, matrices are often assembled as a list of (row, column, value) triplets first and then converted to CSR or CSC.

- Matrix Operations: Operations such as matrix-vector multiplication or matrix addition are written to loop only over the stored non-zeros, following the layout of the chosen format (row by row for CSR, column by column for CSC).

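- Efficient Algorithms: Iterative methods such as the Conjugate Gradient method for symmetric positive-definite linear systems access the matrix only through matrix-vector products, so each iteration costs time proportional to the number of non-zeros. Sparsity makes each iteration cheap; it does not by itself reduce the number of iterations needed to converge.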
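
The steps above can be sketched in plain Python: building CSR arrays from a dense matrix, multiplying by a vector using only stored entries, and running a textbook Conjugate Gradient loop that touches the matrix solely through that product. This is an illustrative sketch that assumes a symmetric positive-definite matrix; the function names are hypothetical, and production code would normally use an optimized library.

```python
import math


def dense_to_csr(dense):
    """Hypothetical helper: build CSR arrays (values, col_indices, row_ptr)."""
    values, col_indices, row_ptr = [], [], [0]
    for row in dense:
        for j, value in enumerate(row):
            if value != 0:
                values.append(value)
                col_indices.append(j)
        # row_ptr[i + 1] marks where row i + 1 starts in values/col_indices.
        row_ptr.append(len(values))
    return values, col_indices, row_ptr


def csr_matvec(csr, x):
    """Compute y = A x, touching only the stored non-zero entries."""
    values, col_indices, row_ptr = csr
    y = []
    for i in range(len(row_ptr) - 1):
        start, end = row_ptr[i], row_ptr[i + 1]
        y.append(sum(values[k] * x[col_indices[k]] for k in range(start, end)))
    return y


def conjugate_gradient(csr, b, tol=1e-10, max_iter=1000):
    """Solve A x = b for symmetric positive-definite A stored in CSR."""
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x, with x starting at zero
    p = list(r)  # first search direction
    rs_old = sum(ri * ri for ri in r)
    for _ in range(max_iter):
        if math.sqrt(rs_old) < tol:
            break
        Ap = csr_matvec(csr, p)  # the only use of A: one sparse mat-vec per iteration
        alpha = rs_old / sum(pi * api for pi, api in zip(p, Ap))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = sum(ri * ri for ri in r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


# A small symmetric positive-definite tridiagonal matrix.
A = [
    [4, -1, 0, 0],
    [-1, 4, -1, 0],
    [0, -1, 4, -1],
    [0, 0, -1, 4],
]
csr = dense_to_csr(A)
print(csr[2])                         # [0, 2, 5, 8, 10]
print(csr_matvec(csr, [1, 1, 1, 1]))  # [3, 2, 2, 3]
x = conjugate_gradient(csr, [1.0, 2.0, 3.0, 4.0])
```

The row-pointer array lets `csr_matvec` find each row's entries with two lookups, which is why CSR is a common choice for matrix-vector products.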

Frequently Asked Questions (FAQs)

Q: What is the primary advantage of using Sparse Matrix (Data Structures)?

A: The main advantage is that both memory use and the cost of operations scale with the number of non-zero values rather than with the full size of the matrix.

Q: Can I convert any matrix into a sparse matrix?

A: Yes, but sparse data structures pay off only when a large fraction of the entries is zero. For a matrix with few zeros, the extra index arrays can make a sparse representation larger and slower than a dense one.
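
A rough memory break-even point follows from counting stored entries. Assume an n × m matrix with nnz non-zeros, 8-byte values, and 4-byte indices (assumptions for illustration; actual sizes vary by library). Dense storage needs 8nm bytes, while CSR needs 8·nnz bytes of values, 4·nnz bytes of column indices, and 4(n + 1) bytes of row pointers, so CSR is smaller exactly when:

```latex
\[
12\,\mathrm{nnz} + 4(n+1) < 8nm
\quad\Longleftrightarrow\quad
\frac{\mathrm{nnz}}{nm} < \frac{2}{3} - \frac{n+1}{3nm}
\]
```

For large matrices this is a density of about two thirds. The density at which sparse operations also become faster is typically much lower, because every access goes through an index array; the exact point depends on the hardware and library.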

Q: How do I choose between CSR and CSC formats?

A: The choice depends on the operations you plan to perform. CSR gives fast access to individual rows and is commonly used for matrix-vector products, while CSC gives fast access to individual columns and suits column-oriented algorithms.
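
The difference shows up clearly when extracting a column. In CSC, the non-zeros of column j form one contiguous slice, whereas in CSR they are scattered across every row. A minimal sketch, with hypothetical helper names `dense_to_csc` and `csc_column`:

```python
def dense_to_csc(dense):
    """Hypothetical helper: build CSC arrays (values, row_indices, col_ptr)."""
    n_rows, n_cols = len(dense), len(dense[0])
    values, row_indices, col_ptr = [], [], [0]
    for j in range(n_cols):          # walk column by column
        for i in range(n_rows):
            if dense[i][j] != 0:
                values.append(dense[i][j])
                row_indices.append(i)
        col_ptr.append(len(values))  # where column j + 1 starts
    return values, row_indices, col_ptr


def csc_column(csc, j):
    """Return column j's non-zeros as (row, value) pairs from one contiguous slice."""
    values, row_indices, col_ptr = csc
    start, end = col_ptr[j], col_ptr[j + 1]
    return list(zip(row_indices[start:end], values[start:end]))


matrix = [
    [0, 0, 3, 0],
    [0, 0, 0, 0],
    [5, 0, 0, 7],
]
csc = dense_to_csc(matrix)
print(csc)                 # ([5, 3, 7], [2, 0, 2], [0, 1, 1, 2, 3])
print(csc_column(csc, 3))  # [(2, 7)]
```

Retrieving a column from CSR would instead require checking the column indices of every row.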

Q: Are there libraries available for working with sparse matrices?

A: Yes. For example, SciPy provides the scipy.sparse module in Python, and Eigen provides sparse matrix support in C++. Both support creating, manipulating, and performing operations on sparse matrices.

Q: Can sparse matrices be directly used in machine learning algorithms?

A: Yes, many machine learning libraries accept sparse input for algorithms such as Support Vector Machines and Logistic Regression, although not every algorithm or library supports it.

Q: What challenges might arise when working with sparse matrices?

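A: Common challenges include choosing a representation that suits the intended operations, the cost of converting between formats, irregular memory access that can make sparse operations slower per non-zero than dense ones, and the need for specialized algorithms. Compressed formats such as CSR and CSC are also expensive to modify, because inserting a new non-zero means shifting all later entries.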
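
The modification cost can be seen in a small sketch of inserting one new entry into CSR arrays: later values and column indices shift by one slot, and every later row pointer changes. The helper `csr_insert` is hypothetical and assumes the position is not already stored.

```python
def csr_insert(csr, i, j, value):
    """Hypothetical helper: insert a new non-zero at (i, j) into CSR arrays in place.

    Assumes (i, j) is not already stored. Entries after the insertion point
    shift by one slot and all later row pointers grow by one, so a single
    insert costs O(nnz + n_rows).
    """
    values, col_indices, row_ptr = csr
    start, end = row_ptr[i], row_ptr[i + 1]
    # Keep column indices within row i sorted.
    pos = start
    while pos < end and col_indices[pos] < j:
        pos += 1
    values.insert(pos, value)
    col_indices.insert(pos, j)
    for r in range(i + 1, len(row_ptr)):
        row_ptr[r] += 1


# CSR form of [[0, 0, 3, 0], [0, 0, 0, 0], [5, 0, 0, 7]]
csr = ([3, 5, 7], [2, 0, 3], [0, 1, 1, 3])
csr_insert(csr, 1, 1, 9)
print(csr)  # ([3, 9, 5, 7], [2, 1, 0, 3], [0, 1, 2, 4])
```

This is why matrices are usually assembled in a triplet or dictionary layout first and compressed afterward.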